By Dr. Christopher Kent

A vivid memory of my chiropractic college days is a guest lecture on technique. The year was 1972. The doctor delivering the lecture was a well-known “expert from afar.” It was made clear that we were privileged to have such a distinguished clinician share his experiences with us. The doctor presented a few cases, illustrated with pre- and post-treatment radiographs. Of course, there were no controls. We were asked to accept his recommendations based upon two “indisputable” facts:

1. He was rich. His slide show was sprinkled with photographs of his twin engine aircraft and fleet of Mercedes-Benz automobiles. The message was simple: “If what I do didn’t work, patients wouldn’t come to me and I wouldn’t be rich.”

2. He knew it worked! The “it” was the specific procedure being promoted. What did he mean by “worked”? See item 1!

I left feeling buffaloed and bewildered, but the applause from the students and faculty was deafening. I was going to ask a few questions, but realized that little productive would result from such an action. Apparently this was the nature of chiropractic “research.”

In 1975, I was privileged to be among 16 chiropractors selected to participate in the NINCDS Workshop on the Research Status of Spinal Manipulative Therapy, conducted under the auspices of the National Institutes of Health. Distinguished basic scientists, as well as medical and osteopathic clinicians, were present. The objective was simply to determine what was and was not known in the basic and clinical sciences. M.D.s, D.O.s, and D.C.s were speaking to one another. There was genuine interest in chiropractic philosophy and techniques. I saw a glimmer of hope.

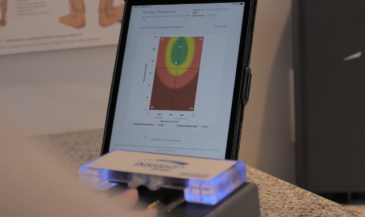

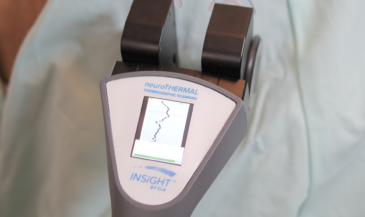

We still have a long way to go. The blustering and buffoonery of 20 years ago are alive and well. I recently spoke with a chiropractor concerning paraspinal EMG scanning. Initially, I welcomed his comments. But soon déjà vu was playing in my mind. The doctor in question never used paraspinal EMG scanning in clinical practice. Nor did any members of the group he claimed to represent. His logic was reminiscent of the technique peddlers of my student days:

1. He is rich! Most of our 30-minute conversation with this doctor was devoted to listening to him boast about his clinic, his income, and his material possessions. He had specific figures memorized. He made disparaging remarks about his colleagues who practiced in 1,200-square-foot offices and published case studies. He, of course, has published nothing. Apparently his judgment is impeccable because he’s rich. He also claimed close ties to the insurance industry!

2. He knows some medical doctors. A skilled name-dropper, he mentioned the name of a well-known surgeon he “works with.” Of course, the M.D. in question has not been involved in researching paraspinal EMG scanning. But no matter: if he knows an M.D., his opinion must be valid.

3. He has a friend who co-authored a book about something else. Of course, this author has no experience in paraspinal EMG scanning either. But no matter: his friend wrote a book about something else, so he’s an expert.

4. He did a sloppy review of the literature and found little relevant information. Using the proper keywords means the difference between a “hit” and a “miss” when performing a Medline search. Having some familiarity with relevant journals and prominent researchers helps. Finally, it is important to realize that most chiropractic science journals are not currently indexed on Medline. As the old computer cliché goes, “garbage in, garbage out.” He missed the 300+ SEMG articles indexed in Medline.

Doctor three put the first two to shame. He simply stated that he didn’t want any information from science journals concerning EMG scanning. He said that if he were sent any, he would not read it. My response would be, “OK. He’s not interested.” But the plot thickens… this doctor is on a state examining board! He has the temerity to judge a procedure he refuses to even read about.

The first doctor “knew” his technique “worked” in the absence of research findings. The second doctor felt he could “blow out of the water in three minutes” a technique he never used, which HAS been researched in universities and chiropractic colleges and published in refereed, peer-reviewed journals. Both doctors can, perhaps, be excused for their ignorance.

Unfortunately, the same cannot be said for doctor number three, who elects to maintain a state of contrived ignorance.

Toward a solution

There is no “quick fix.” Fortunately, there are strategies that can be implemented that address these issues. Such a plan should be multi-faceted:

1. Chiropractic college education. Education at the first professional degree level should emphasize critical thinking. Further, it should equip students with a working knowledge of research methods and clinical epidemiology. The “research ideal” should be presented in the context of its value in establishing “real world” clinical strategies consistent with chiropractic philosophy.

2. Continuing education programs. Field practitioners should be afforded opportunities to learn the rudiments of research methods. To make such courses appealing, practical applications to clinical practice should be emphasized. Examples would be ways to evaluate analytical techniques and adjusting procedures.

3. Field involvement in clinical research. Few institutionally based research programs involve active field participation. Some “brand name” techniques make dubious claims based upon faulty research design. Field practitioners and promoters of equipment and techniques should be encouraged to actively participate in well-designed, institutionally based clinical research projects.

4. Financial commitment to research. Chiropractors have often sought political and legal solutions to research problems. We have a rich heritage, and have made tremendous progress in achieving licensure, insurance equality, and other triumphs. But as we approach the 21st century, the scientific community as well as health care consumers are going to demand that we “put up or shut up” when making claims.

The “high rollers” in our profession should give serious consideration to contributing generously to chiropractic research. So should any D.C. interested in the survival of the profession. Please note that I said chiropractic research. This means research concerning the vertebral subluxation and its effects, not the symptomatic treatment of sore backs.

Critical thinking

There are several criteria which I suggest chiropractors use in evaluating analytical procedures:

- Reliability. Reliability is a measure of the ability to reproduce findings. Inter-examiner reliability (also termed objectivity) is a measure of the agreement between two or more examiners. Perfect reliability would result in a coefficient of 1. The higher the coefficient, the greater the reliability.

- Validity. Validity seeks to determine if the measurement in question correlates well with what is claimed to be measured. The technique under evaluation is ideally compared with a “gold standard.” A technique may be reliable, but not valid. In the absence of a “gold standard,” construct validity may be established.

- Sensitivity. If a procedure is claimed to detect a given condition, sensitivity and specificity should be considered. Sensitivity refers to the proportion of persons with the condition who have a positive test result.

- Specificity. Specificity is the proportion of persons without the condition who have a negative test result. In clinical practice, there may be some “trade off” involving sensitivity and specificity.

- Normative data. If a measurement is involved, a normative database should be available.

- Critical review and publication. Presentation at scientific symposia and/or publication in refereed, peer-reviewed journals means that the paper has undergone critical review. While this does not in any way guarantee the validity of the results, it does mean that other investigators have had the opportunity to critically evaluate the work.

- Duplication of findings. The case for the procedure is strengthened if there is independent corroboration by other investigators.

- Institutional involvement. The case for a procedure is strengthened if it is being taught under the auspices of an accredited chiropractic college. Research under the aegis of a chiropractic college is a sign that the procedure is undergoing objective evaluation.
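Several of the criteria above reduce to simple arithmetic. The following is a minimal sketch in Python, not a prescribed method. The sensitivity and specificity functions follow the definitions given above. For inter-examiner reliability, the sketch assumes Cohen's kappa as the agreement coefficient; that is one common choice among several, and the original text does not name a specific statistic. All function names and data here are hypothetical and serve only as illustration.

```python
from collections import Counter


def sensitivity_specificity(has_condition, test_positive):
    """Return (sensitivity, specificity) from two paired lists of booleans.

    has_condition[i] is the "gold standard" status of person i;
    test_positive[i] is the result of the procedure being evaluated.
    Assumes at least one person with and one without the condition.
    """
    pairs = list(zip(has_condition, test_positive))
    true_pos = sum(1 for c, t in pairs if c and t)
    false_neg = sum(1 for c, t in pairs if c and not t)
    true_neg = sum(1 for c, t in pairs if not c and not t)
    false_pos = sum(1 for c, t in pairs if not c and t)
    # Sensitivity: proportion of persons WITH the condition who test positive.
    sensitivity = true_pos / (true_pos + false_neg)
    # Specificity: proportion of persons WITHOUT the condition who test negative.
    specificity = true_neg / (true_neg + false_pos)
    return sensitivity, specificity


def cohen_kappa(examiner_a, examiner_b):
    """Chance-corrected agreement between two examiners (assumed statistic).

    Returns 1.0 for perfect agreement; values near 0 mean the agreement
    is about what chance alone would produce.
    """
    n = len(examiner_a)
    observed = sum(1 for a, b in zip(examiner_a, examiner_b) if a == b) / n
    counts_a = Counter(examiner_a)
    counts_b = Counter(examiner_b)
    # Agreement expected by chance, from each examiner's rating frequencies.
    expected = sum(counts_a[k] * counts_b[k] for k in counts_a) / (n * n)
    if expected == 1.0:
        # Both examiners gave every subject the same single rating.
        return 1.0
    return (observed - expected) / (1.0 - expected)


if __name__ == "__main__":
    # Hypothetical data: 4 persons with the condition, 6 without.
    condition = [True] * 4 + [False] * 6
    result = [True, True, True, False,
              False, False, False, False, True, False]
    print(sensitivity_specificity(condition, result))

    # Hypothetical ratings of the same 10 subjects by two examiners.
    a = ["pos", "pos", "neg", "neg", "pos", "neg", "neg", "pos", "neg", "neg"]
    b = ["pos", "pos", "neg", "neg", "neg", "neg", "neg", "pos", "neg", "pos"]
    print(cohen_kappa(a, b))
```

In this made-up example the two examiners agree on 8 of 10 subjects, yet the chance-corrected coefficient is noticeably lower than 0.8. This is why raw percent agreement can overstate reliability.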

In considering these criteria, the chiropractor is cautioned to avoid prejudices which may cloud the evaluation. For example, a new technique should not be subjected to a more burdensome standard than an older, more popular technique.

A solid commitment to research and the critical thinking it engenders is essential if the profession is to grow and prosper in today’s scientific environment. The Council on Chiropractic Practice has made that commitment.

Will you join us?

References

1. Fletcher R., Fletcher S., Wagner E.: “Clinical Epidemiology: The Essentials.” Williams and Wilkins. Baltimore, MD, 1982.

2. Elzey F.: “A First Reader in Statistics.” Brooks/Cole. Monterey, CA, 1974.