by Dr. Christopher Kent

Chiropractic today is undergoing a revolution. Chiropractors who were weaned on a diet of insurance dollars are facing dramatic cuts in the scope of reimbursable services. Their clinical judgements are being challenged by reviewers who have never seen the patient, and who lack accountability for the clinical outcome. Managed care seems the wave of the future, where a paternalistic bureaucracy will dictate practice parameters rather than the attending doctor. More disturbing is the fact that the doctor will be accountable to the managed care entity, not the patient.

It is claimed that only interventions which are demonstrated to be scientifically sound will be included. Unfortunately, few have considered the inherent limitations of science as it is currently practiced. Also neglected is consideration of the misuse of the term “scientific” by individuals seeking to achieve political and economic ends.

Students, practitioners, insurers, government entities, and managed care organizations all long for a level of clinical certainty which simply does not exist. As Weed observed, “Medical schools teach you to memorize what you don’t understand and to solve problems by answering multiple choice questions. Well, patients are not multiple choices…Patients recognize their own uniqueness, even if we do not.” (1)

In an effort to create the illusion of clinical certainty, practice guidelines have been devised. Such guidelines typically rely upon two sources: scientific research and formalized consensus efforts. Specifically excluded are “unsubstantiated clinical or theoretical opinion.” (2)

Before proceeding further, it is necessary to define the term “science.” Science may be defined as “knowledge gained by systematic study and analysis.” (3) Science provides investigators with a useful method of inquiry. Scientific methods have led the healing arts from a world limited by anecdotal observation, myth, and superstition. However, doctors must not lose sight of the fact that science is not the only valid method of inquiry.

Further, it must be realized that while predictability may be considered in designing clinical strategies, all that ultimately matters is what is effective for a given patient in a specific circumstance. Educational institutions producing health care providers must guard against graduating practitioners who are automatons following flow charts rather than thinking, feeling, human beings.

Although science is not an enemy of chiropractic, scientism most certainly is. Scientism limits all fields of human inquiry to contemporary technology. Smith states that scientism “…refers to an uncritical idolization of science — the belief that only science can solve human problems, that only science has value.” Holton observed that “Scientism divides all thought into two categories: scientific thought and nonsense.” (4,5)

What’s wrong with that? A practitioner of science 100 years ago would be forced to declare cosmic waves, viruses, and DNA “unproven” concepts. Such a scientist, bound by the limitations of the technology of the times, would be unable to “prove” or “disprove” the existence of such things. Our hypothetical scientist might go one step further and deny the possibility of their existence, active as some of them may have been in the dynamics of health and disease! Scientism is a scourge which blinds the visionary and manacles the philosopher.

Aldous Huxley was acutely aware of the folly of limiting all human inquiry to the scientific method. He stated, “The real charm of the intellectual life — the life devoted to erudition, to scientific research, to philosophy, to aesthetics, to criticism — is its easiness. It’s the substitution of simple intellectual schemata for the complexities of reality; of still and formal death for the bewildering movements of life.” (6) Science has a place in chiropractic. Scientism does not.

Just as scientism limits human inquiry to available technology, bad science, characterized by questionable research designs, leads to faulty conclusions.

For example, consider the controlled clinical trial. Most devotees of scientism regard evidence derived from controlled clinical trials as superior to other forms of evidence. The AHCPR guideline for acute low back problems in adults, for instance, states, “The panel considered randomized controlled trials (RCTs)…to be the acceptable method for establishing the efficacy of treatment methods.” (7)

The randomized clinical trial was first proposed by the British statistician Austin Bradford Hill in the 1930s. (8) Since then, the RCT has received a plethora of praise and a paucity of criticism. The Office of Technology Assessment noted, “objections are rarely if ever raised to the principles of controlled experimentation on which RCTs are based.” (9)

Despite such widespread enthusiasm, A.B. Hill recognized that clinical research must answer the following question: “Can we identify the individual patient for whom one or the other of the treatments is the right answer? Clearly this is what we want to do…There are very few signs that they (investigators) are doing so.” (10)

Herein lies the fatal flaw in RCTs. As Coulter (8) observed, “We consider the controlled clinical trial to be a wrongheaded attempt by man to subjugate nature. Its advocates hope to overcome the innate and ineluctable heterogeneity of the human species in both sickness and health merely by applying a rigid procedure.” The inability of the RCT to deal with patient heterogeneity makes it impossible to use RCT results to determine whether a given intervention will achieve a specified result in an individual patient. Friedman stated, “The patient must not be viewed as merely one subject in a population but rather as a unique individual who may or may not benefit from such treatment.” (11)

Coulter (8) summarizes the problem with RCTs succinctly: “The clinical trial is an experiment performed on an unreal, unknown, mysterious entity — an assembly of sick people who have some features in common. Its results cannot be extrapolated to any larger population, and the information cannot be reliably duplicated. What is worse, the results of the trial cannot even be extrapolated to the individual patient, who (not some faceless member of a ‘homogeneous group’) is still the object of medical ministration.”
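
The heterogeneity objection can be illustrated with a deliberately artificial sketch. The numbers below are invented for illustration and come from no trial, and the function name `summarize_trial` is hypothetical. Half of the treated patients improve and half worsen by the same amount, so the group average reports no effect at all while every individual patient has in fact changed.

```python
from statistics import mean


def summarize_trial(treated, control):
    """Return the group-average effect and how many treated patients moved each way."""
    return {
        "average_effect": mean(treated) - mean(control),
        "improved": sum(1 for change in treated if change > 0),
        "worsened": sum(1 for change in treated if change < 0),
    }


# Invented changes in a symptom score (positive = improvement); not trial data.
treated = [8, 8, 8, 8, 8, -8, -8, -8, -8, -8]
control = [0] * 10

print(summarize_trial(treated, control))
# {'average_effect': 0, 'improved': 5, 'worsened': 5}
```

This is an extreme case chosen to make the point visible; real data would rarely split so evenly, but the group average still says nothing about which patients fall on which side.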

If the shortcomings of the RCT are disturbing, they pale in comparison to consensus methods of clinical guideline development. Consensus methods have been employed by health care provider groups in an effort to standardize the management of various clinical problems. (12) Such techniques are generally developed within the conceptual framework of the allopathic paradigm. Specifically, diagnostic and/or treatment strategies are developed for specific diseases or clinical syndromes.

Critics of consensus methods have suggested that developing formalized standards of practice leads to the practice of “cookbook medicine.” It is feared that the unique circumstances of the patient, the condition of the patient, and the clinical insights of the attending doctor are subservient to the standards promulgated in the “cookbook.” (13)

In addition to charges of “cookbook medicine,” the selection of participants will significantly affect the outcome of the process. Sackman (14) describes a “halo effect” where participants “bask under the warm glow of a kind of mutual admiration society.”

As Shekelle (13) has observed, acceptance of practice standards has been poor. He cites some significant shortcomings of previous methods of constructing standards. Most commonly, an inadequate review of literature and/or an implicit method of achieving consensus were to blame.

In short, consensus processes frequently result in the promulgation of practice guidelines which are based upon the opinions and biases of the participants in the absence of clinical data. The guidelines produced are a function of the composition of the group. For example, if a group of Applied Kinesiology (AK) doctors dominated a consensus group, they would likely produce very different guidelines than a group of doctors who practice Chiropractic Biophysics technique.

An example of an opinion masquerading as fact is the frequently repeated recommendation that an “adequate trial of treatment/care” is as follows: “A course of two weeks each of two different types of manual procedures (four weeks total) after which, in the absence of documented improvement, manual procedures are no longer indicated.” (15) In the RAND document, we are told that “an adequate trial of spinal manipulation is a course of two weeks for each of two different types of spinal manipulation (four weeks total), after which, in the absence of documented improvement, spinal manipulation is no longer indicated.” A favorable response to manipulation is defined by RAND as “an improvement in symptoms.” (16)

Objective physiologic measurements and imaging findings do not enter into their definition. What is the scientific basis for such caps? According to RAND, “There exists almost no data to support or refute these values for treatment frequency and duration, and they should be regarded as reflecting the personal opinions of these nine particular panelists.” (16)

Another unfortunate problem is that participants in consensus panels may be called upon to render opinions on procedures for which they have little or no training or expertise beyond a review of literature.

For illustration, let’s briefly consider a technique other than spinal adjusting. Both AHCPR (7) and Mercy (15) rated acupuncture. How many panelists were qualified acupuncturists? And why did they reach rather different conclusions? Did radical changes occur in the practice of acupuncture between the two conferences? Mercy rated acupuncture “promising,” which is the second strongest positive recommendation on its scale. AHCPR stated acupuncture was “not recommended” for treating patients with acute low back problems. Can these opposing recommendations be attributed to a change in the research status of acupuncture? Or are they simply a product of differing personal opinions and biases?

The opinions of a self-appointed mutual admiration society do not change the facts. In 1897, the Indiana House of Representatives passed a bill that would have effectively legislated a value of 3.2 for pi. The bill stalled in the state Senate and never became law, which spared engineers the need to ignore it when they built bridges! It is equally ridiculous to vote upon the indications and efficacy of clinical procedures in the absence of data.

If clinical guidelines were truly voluntary, as their proponents frequently claim, there would be no problem. However, the following excerpt from former FCLB president Brent Owens, D.C., makes it clear that following practice guidelines may be as voluntary as paying taxes: “We hope that the various licensing jurisdictions will use this document (Mercy) in disciplinary cases involving professional incompetency so that those individuals are not allowed to continue chiropractic practice.”

Are there alternatives? Happily, yes.

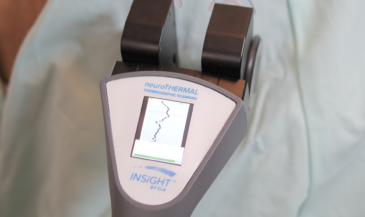

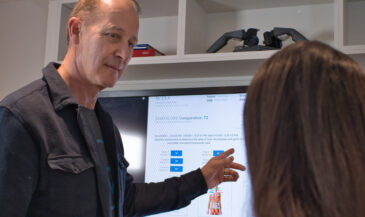

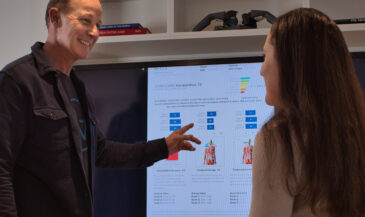

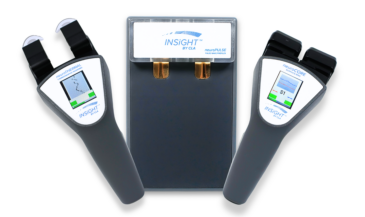

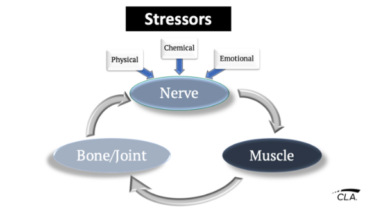

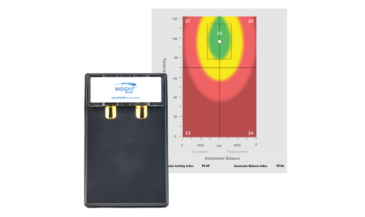

First, clinical decision-making should rely on clearly defined, objective indicators of a favorable outcome. In the case of analysis of the vertebral subluxation complex (VSC), this entails assessment of both the structural and neurophysiological aspects of the VSC. Such methodology takes into consideration the unique constitution of each patient, the skill of the doctor, and the nature of the doctor/patient relationship. Use of objective anatomical and physiological outcome assessments, performed throughout a course of professional care, enables the clinician to develop more effective clinical strategies.

Thirty years ago, Platt recognized this, stating “…there are clear clinical or laboratory tests which will rapidly tell whether the treatment is effective…a controlled clinical trial is quite often the wrong way to assess the value of a…treatment.” (17) Recognition of the unique needs, preferences, and objectives of the patient and doctor will enable clinicians to develop meaningful clinical strategies in the context of a free market.

Creating experimental designs which overcome the shortcomings of traditional research methods poses a greater challenge. Traditional allopathic designs which are directed toward specific conditions are inappropriate tools for assessing the value of chiropractic interventions on general health. Assessment of analytic strategies also requires the development of appropriate experimental designs. For instance, a diagnostic tool is considered clinically useful if it is reliable and valid. Reliability refers to repeatability. What’s wrong with that?

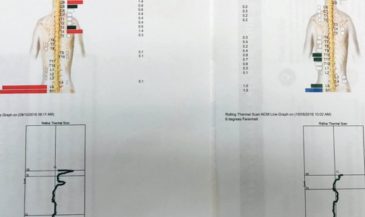

Consider the pattern approach to skin temperature analysis. In this system, reliability would indicate pathology. Lack of repeatable readings would be expected in healthy populations. Therefore, traditional statistical tools for determining reliability, such as the intraclass correlation coefficient, would be inappropriate.
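
The intraclass correlation coefficient mentioned here can be made concrete with a small sketch. The version below is the one-way random-effects form, often written ICC(1,1), computed from repeated readings on each subject. The function name `icc_oneway` and the sample readings are illustrative assumptions, not data from any study. The sketch shows what the statistic rewards: readings that repeat within a subject while differing between subjects.

```python
from statistics import mean


def icc_oneway(ratings):
    """One-way random-effects ICC(1,1).

    ratings[i][j] is the j-th repeated reading on subject i. Every subject
    must have the same number of readings k, with k >= 2.
    """
    n = len(ratings)
    k = len(ratings[0]) if n else 0
    if n < 2 or k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("need >= 2 subjects, each with the same k >= 2 readings")

    grand = mean(x for row in ratings for x in row)
    subject_means = [mean(row) for row in ratings]

    # Between-subject and within-subject mean squares from a one-way ANOVA.
    ms_between = k * sum((m - grand) ** 2 for m in subject_means) / (n - 1)
    ms_within = sum(
        (x - m) ** 2 for row, m in zip(ratings, subject_means) for x in row
    ) / (n * (k - 1))

    # Undefined (division by zero) if every reading in the data set is identical.
    return (ms_between - ms_within) / (ms_between + (k - 1) * ms_within)


# Hypothetical readings: each inner list is one subject measured twice.
stable = [[1, 1], [2, 2], [3, 3]]      # every subject repeats exactly
shifting = [[1, 3], [3, 1], [2, 2]]    # readings change between sessions

print(icc_oneway(stable))    # 1.0
print(icc_oneway(shifting))  # -1.0
```

Under the pattern approach, a value near 1 on this statistic would be read as a sign of pathology in the patient rather than as evidence of a good instrument, which is why the coefficient alone cannot settle the question of clinical usefulness.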

Research designs must be focused on the actions of the unifying systems of the body, with particular emphasis on the nervous system and the immune system. Other indicators of improved well-being and quality of life must also be assessed. Future columns will suggest pragmatic research strategies within a chiropractic conceptual framework.

Only a sound research base, taken in concert with the unique characteristics of the individual patient, can result in meaningful clinical guidelines. Machiavellian practice guidelines, based upon the untested opinions of individuals who have never seen the patient to whom the guidelines are applied, have no place in chiropractic.

Furthermore, failure to recognize the role of the spiritual and human elements involved in the healing process must result in a fragmented, incomplete approach to health care. To stake our claim in the 21st century, we must purge the dogma of scientism from our profession.

References

1. Weed LL: “Dr. Weed sounds off.” Medical Group News, July 1974, page 3. Quoted in Coulter (8).

2. Hansen DT: “Back to basics: determining how much care to give and reporting patient progress.” Topics in Clinical Chiropractic 1(4):1, 1994.

3. “The New Webster’s Dictionary.” Lexicon Publications, New York, 1986.

4. Smith RF: “Prelude to Science.” Charles Scribner’s Sons. New York, NY, 1975. P. 12.

5. Holton G: “The false images of science.” In Young LB (ed): “The Mystery of Matter.” Oxford University Press. London, UK, 1965.

6. Huxley A: Point Counter Point. Quoted in Smith RF, op cit p. 8.

7. Bigos S, Bowyer O, Braen G, et al. “Acute Low Back Problems in Adults.” Clinical Practice Guideline No. 14. AHCPR Publication No. 95-0642. Rockville MD: Agency for Health Care Policy and Research. Public Health Service. U.S. Department of Health and Human Services. December, 1994.

8. Coulter HL: The Controlled Clinical Trial: An Analysis. Center for Empirical Medicine. Washington, DC, 1991.

9. US Congress. Office of Technology Assessment, 1983, page 7. Quoted in Coulter (8).

10. Hill AB: “Reflections on the controlled clinical trial.” Annals of the Rheumatic Diseases 25:107, 1966.

11. Friedman HS: “Randomized clinical trials and common sense.” Am J Med 81:1047, 1986.

12. Fink A, Kosecoff J, Chassin M, Brook RH: “Consensus methods: characteristics and guidelines for use.” Am J Public Health 74(9):979, 1984.

13. Shekelle P: “Current status of standards of care.” Chiropractic Technique 2(3):86, 1990.

14. Sackman H: “Delphi Critique.” Lexington Books. Lexington, MA, 1975.

15. Haldeman S, Chapman-Smith D, Petersen D, eds. Guidelines for Chiropractic Quality Assurance and Practice Parameters: Proceedings of the Mercy Center Consensus Conference. Aspen Publishers, Inc. Gaithersburg, MD, 1992.

16. Shekelle PG, Adams AH, Chassin MR, et al: The Appropriateness of Spinal Manipulation for Low Back Pain. Indications and Ratings of a Multidisciplinary Expert Panel. RAND Corporation. Santa Monica, CA, 1991.

17. Platt R: “Doctor and patient.” Lancet 1963 (ii):1156. Quoted in Coulter (8).