By Dr. Christopher Kent

Evidence-based clinical practice is defined as “The conscientious, explicit, and judicious use of the current best evidence in making decisions about the care of individual patients…(it) is not restricted to randomized trials and meta-analyses. It involves tracking down the best external evidence with which to answer our clinical questions.” [1]

Despite such a clear admonition, CCGPP has elected to exclude case studies, while basing recommendations on the consensus opinions of panel members. Consensus methods merely formalize the opinions of the participants. Jagoda [2] listed the characteristics of a formal consensus process:

* Group of experts assemble
* Appropriate literature reviewed
* Recommendations not necessarily supported by scientific evidence
* Limited by bias and lack of defined analytic procedures

The selection of participants will significantly affect the outcome of the process. Sackman [3] describes a “halo effect” where participants “bask under the warm glow of a kind of mutual admiration society.”

The National Health and Medical Research Council [4] has made it clear that opinions are not evidence. “The current levels (of evidence) exclude expert opinion and consensus from an expert committee as they do not arise directly from scientific investigation.” According to Rosner [5], Bogduk was equally emphatic: consensus or expert opinion is no longer to be accepted as a form of evidence.

In contrast, case studies are gaining respect in the scientific and clinical communities.

Rosner [6] wrote: “Interestingly, what had been considered to be a lower stratum of evidence in quality (observational studies) compared to randomized clinical trials may not be as it has been recently reported that in terms of treatment effects, estimates from observational studies conducted since 1984 do not appear to be consistently larger, or qualitatively different from those obtained in more fastidiously constructed randomized clinical trials. [7] Indeed, the entire pecking order of the quality of scientific clinical evidence, as it appears to have been envisioned by conventional wisdom, has been seriously questioned, such that observations taken in the doctor’s office assume a far greater value in establishing an evidence base with external as well as internal, validity. [8]

“…What all this is simply saying is that the entire range of research and clinical experience needs to be called into play when making a clinical judgment. This would mean that the ‘lowly’ case study takes a rightful place in the pantheon of accumulated evidence supporting a particular type of healthcare intervention. As David Eisenberg once remarked, Chinese medicine endured into the 20th century without documentation from clinical trials because it was substantiated by 3,000 years of case studies. Only 15 percent of what we regard as modern medicine appears to have been supported by any scientific evidence at all, [9] and only one percent has been described as sound. [10]”

Rosner concluded, “Whoever is seeking documentation of clinical practice needs to be critical enough to avoid the lure of the gold standard in assessing evidence, so as not to end up like the three prospectors in ‘The Treasure of Sierra Madre’ who, much to their great horror, find that they have come up with fool’s gold.”

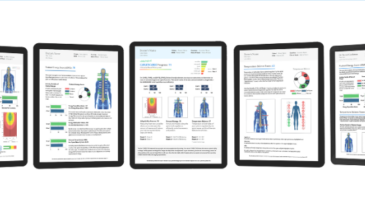

| Rank | Methodology | Description |
| --- | --- | --- |
| 1 | Systematic reviews and meta-analyses | Systematic review: review of a body of data that uses explicit methods to locate primary studies, and explicit criteria to assess their quality. Meta-analysis: A statistical analysis that combines or integrates the results of several independent clinical trials considered by the analyst to be “combinable” usually to the level of re-analysing the original data, also sometimes called: pooling, quantitative synthesis. Both are sometimes called “overviews.” |
| 2 | Randomised controlled trials (finer distinctions may be drawn within this group based on statistical parameters like the confidence intervals) | Individuals are randomly allocated to a control group and a group who receive a specific intervention. Otherwise the two groups are identical for any significant variables. They are followed up for specific end points. |
| 3 | Cohort studies | Groups of people are selected on the basis of their exposure to a particular agent and followed up for specific outcomes. |
| 4 | Case-control studies | “Cases” with the condition are matched with “controls” without, and a retrospective analysis used to look for differences between the two groups. |
| 5 | Cross sectional surveys | Survey or interview of a sample of the population of interest at one point in time |
| 6 | Case reports | A report based on a single patient or subject; sometimes collected together into a short series |
| 7 | Expert opinion | A consensus of experience from the good and the great. |
| 8 | Anecdotal | Something a bloke told you after a meeting or in the bar. |

An orthodox evidence hierarchy is shown in the accompanying table [11].

The author notes, “Many biomedical discoveries have had to await the development of techniques capable of dealing with populations in a statistical manner. For instance:

* Simple observation may prove that sewing up a torn vein stops patients bleeding to death.
* In the 1950s, cohort studies established the link between smoking and lung cancer.
* Nowadays, to detect small but important differences in survival rates with different combinations of drugs for cancer, large multicentre randomised controlled trials (RCTs) may be needed.

“Techniques which are lower down the ranking are not always superfluous…it is not that one method is better: for a given type of question there will be an appropriate method.”

As Sackett wrote, “External clinical evidence can inform, but can never replace, individual clinical expertise, and it is this expertise that decides whether the external evidence applies to the individual patient at all and, if so, how it should be integrated into a clinical decision. Similarly, any external guideline must be integrated with individual clinical expertise in deciding whether and how it matches the patient’s clinical state, predicament, and preferences, and thereby whether it should be applied.” [1]

Perhaps Baruss [12] said it best, “If we are serious about coming to know something, then our research methods will have to be adapted to the nature of the phenomenon that we are trying to understand…One may need to draw on the totality of one’s experience and not just on that subset that consists of observations made through the process of traditional scientific discovery.”

It is time for CCGPP to stop ignoring evidence that does not fit its user-created, myopic reality.

References

1. Sackett DL. Editorial. “Evidence-based medicine.” Spine 1998;23(10):1085.

2. Jagoda A. “Clinical policies’ development and applications.” ACEP 2004. http://www.ferne.org

3. Sackman H. “Delphi Critique.” Lexington Books. Lexington, MA, 1975.

4. “How to use the evidence: assessment and application of scientific evidence.” National Health and Medical Research Council. Commonwealth of Australia. 2000.

5. Rosner AL. “Evidence-based clinical guidelines for the management of acute low back pain: Response to the guidelines prepared for the Australian Medical Health and Research Council.” JMPT 2001;24(3):214.

6. Rosner A. “Reality-based practice: Prospecting for the elusive gold standard.” Dynamic Chiropractic. February 25, 2002.

7. Benson K, Hartz AJ. “A comparison of observational studies and randomized controlled trials.” New England Journal of Medicine 2000;342(25):1878-1886.

8. Jonas WB. “The evidence house: How to build an inclusive base for complementary medicine.” Western Journal of Medicine 2001;175:79-80.

9. Smith R. “Where is the wisdom: The poverty of medical evidence.” British Medical Journal 1991;303:798-799, quoting David Eddy, MD, professor of health policy and management, Duke University, NC.

10. Rachlis N, Kuschner C. “Second Opinion: What’s Wrong with Canada’s Health Care System and How to Fix It.” Collins, Toronto (1989).

11. “Systematic reviews: what are they and why are they useful?” University of Sheffield. http://www.shef.ac.uk/scharr/ir/units/systrev/hierarchy.htm

12. Baruss I. “Authentic Knowing: The Convergence of Science and Spiritual Aspiration.” Purdue University Press. West Lafayette, IN. 1996, pp 40-41.